Meta introduces new feature to tag images created by AI

Discover how Meta is stepping up its efforts to label AI-generated images on its social media platforms, bringing more transparency to what users see online.

Navigating the New Frontier of AI-Generated Media

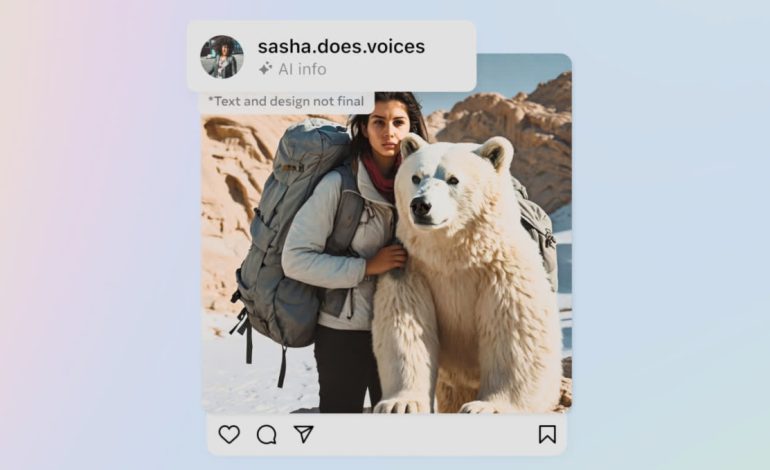

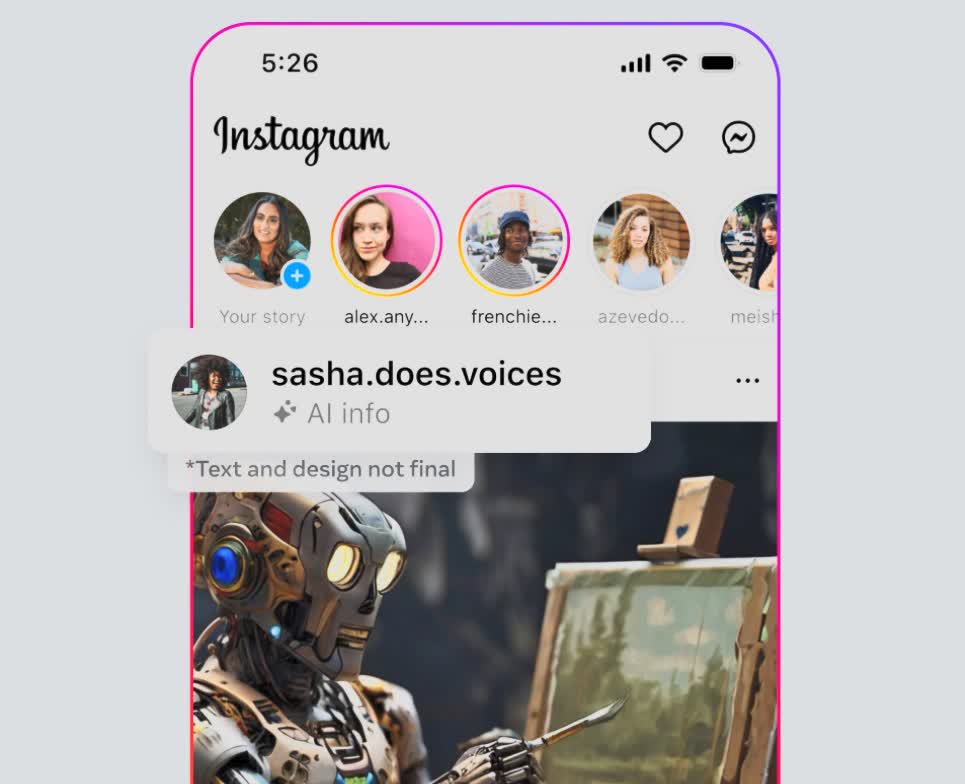

On social media, the spread of AI-generated images is a growing concern, particularly when such images are passed off as genuine. At AI Media Cafe, we've learned that Meta is stepping up its efforts to address this issue by building a system to detect and label such content across its platforms, including Facebook, Instagram, and Threads. The initiative covers not only images made with Meta's own AI tools but also those produced by competing services.

Meta’s approach to transparency

Meta's President of Global Affairs, Nick Clegg, has said that the company already labels images made with its own AI tools as "Imagined with AI" and now aims to apply similar labels to content from other generative AI services. This is part of a broader effort to work with other companies on common technical standards for marking AI-generated content. Meta's own AI-generated images currently carry visible markers, invisible watermarks, and embedded metadata to make them easier to identify.

Collaborative efforts for content identification

The social media giant is working with companies including Google, OpenAI, Microsoft, Adobe, Midjourney, and Shutterstock. These companies are in the process of adding metadata to the images their tools produce, which will allow Meta to identify and label those images when they appear on its platforms. Clegg emphasized that users want to know when the content they are viewing was created with AI.
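To make the idea of metadata-based identification more concrete, here is a minimal sketch of how a platform might check an uploaded image for an embedded AI marker. It assumes the marker is the IPTC "digital source type" value `trainedAlgorithmicMedia` stored in the image's XMP metadata packet; the helper names `find_xmp_packet` and `looks_ai_generated` are hypothetical and this is not Meta's actual detection pipeline, which the company has not published in code form.

```python
from typing import Optional

# Assumption: AI-generated images are tagged with the IPTC digital source
# type "trainedAlgorithmicMedia" inside an XMP packet in the file bytes.
AI_SOURCE_TYPE = b"http://cv.iptc.org/newscodes/digitalsourcetype/trainedAlgorithmicMedia"

XMP_OPEN = b"<x:xmpmeta"
XMP_CLOSE = b"</x:xmpmeta>"


def find_xmp_packet(data: bytes) -> Optional[bytes]:
    """Hypothetical helper: return the raw XMP packet embedded in the image, if any."""
    start = data.find(XMP_OPEN)
    if start == -1:
        return None
    end = data.find(XMP_CLOSE, start)
    if end == -1:
        return None
    return data[start:end + len(XMP_CLOSE)]


def looks_ai_generated(data: bytes) -> bool:
    """Hypothetical check: True if the XMP packet declares an AI digital source type."""
    packet = find_xmp_packet(data)
    if packet is None:
        # No metadata at all: this check alone cannot say anything.
        return False
    return AI_SOURCE_TYPE in packet
```

For example, `looks_ai_generated(open("upload.jpg", "rb").read())` would return `True` only if the file still carries that metadata. Because metadata is easily stripped when an image is re-saved or screenshotted, a check like this can only confirm labeled content; it cannot prove that an unlabeled image is authentic.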

Expanding labeling to audio and video

While Meta is improving its ability to tag AI-generated images, Clegg acknowledged that labeling AI-created audio and video is harder, because embedding markers in these formats is more complex. As a stopgap, Meta is introducing a feature that lets users disclose when they share AI-generated audio or video, so the company can label it accordingly. Users will be required to make this disclosure when they post organic content containing photorealistic video or realistic-sounding audio that was digitally created or altered, and Meta may penalize those who fail to do so.

Addressing high-risk deceptive content

Meta is particularly focused on content that poses a significant risk of deceiving the public on matters of importance. Such content will receive a more prominent label to alert users to its artificial nature. Meta is also developing classifiers that can automatically detect AI-generated content even when it lacks invisible markers, and it is exploring ways to make those watermarks harder to remove or alter.

The broader context of AI media

As elections draw near, the need to identify misleading AI-generated content is becoming more urgent. Microsoft has previously reported on AI-generated imagery originating from China that was aimed at influencing American voters. In a recent incident in New Hampshire, a robocall used a voice mimicking President Biden's to urge residents not to vote in the state's primary. The audio was likely generated with a text-to-speech engine from ElevenLabs, and the incident prompted the FCC to propose a ban on AI-generated voices in robocalls.

AI Media Cafe is committed to keeping you informed on the latest developments in AI media technology and the measures being taken to ensure transparency and authenticity in the digital space. As Meta and other companies continue to innovate and adapt, we will provide updates on how these changes impact the landscape of social media and beyond.